Another example of how Microsoft Media Foundation can be annoying in small things. So we have this Video Processor MFT, a transform which addresses multiple video conversion tasks:

The video processor MFT is a Microsoft Media Foundation transform (MFT) that performs colorspace conversion, video resizing, deinterlacing, frame rate conversion, rotation, cropping, spatial left and right view unpacking, and mirroring.

It is easy to see that Microsoft does not offer a lot of DSPs, and even fewer of them are GPU friendly. Video Processor MFT is a “swiss army knife” tool: it takes care of video fitting tasks efficiently and in combination, and it can take advantage of GPU processing with a fallback to a software code path, such as the one known earlier from Color Converter DSP or similar.

Now the main question is, if you are offering just one thing, is there any chance you can do it right?

First of all, the API is not feature rich: it offers just the basics via the IMFVideoProcessorControl interface. Okay, some functionality might not be available in the fallback software code path, but it is still the only Direct3D 11 aware conversion component you are offering, so you could still offer more options for those who want to take advantage of GPU-enabled conversions.

With its internal affiliation to the Direct3D 11 Video Processor API, it would be worth mentioning how exactly the Direct3D API is utilized internally, what the limitations are, and perhaps some advanced options to customize the conversion: the underlying API is more flexible than the MFT.

The documentation is not just scarce, it is also incomplete and inconsistent. The MFT documentation does not mention its implementation of the IMFVideoProcessorControl2 interface, while the interface itself is described as belonging to the MFT. Then again, I wrote before that this interface is known for giving some trouble.

The MFT is designed to work in Media Foundation pipelines, such as those hosted by Media Session and others. However, it does not take a rocket scientist to realize that if you offer developers just one thing for a broad range of tasks, the API will be used in various scenarios, including, for example, use as a standalone conversion API.

They should have mentioned in the documentation that the MFT behaves significantly differently in GPU and CPU modes, for example in the way output samples are produced. CPU mode requires the caller to supply a buffer for the output to be generated into. GPU mode, on the contrary, provides its own output textures, with data, from an internally managed pool (this can be changed, but it is the default behavior). That is fine for Media Session API and the like, but those are also poorly documented, so it is not very helpful overall.
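
A standalone host can at least ask who is expected to allocate the output sample before calling IMFTransform::ProcessOutput. In the real API that information comes from IMFTransform::GetOutputStreamInfo, in the dwFlags field of MFT_OUTPUT_STREAM_INFO. The sketch below only shows the decision logic and is portable on purpose: the two constants are local copies of the flag values as I understand them from the SDK header (on Windows, use the definitions from mftransform.h instead), and the names OutputAllocation and ClassifyOutputAllocation are made up for illustration. That the Video Processor MFT signals its GPU mode exactly this way is my assumption, not something the documentation spells out.

```cpp
#include <cassert>
#include <cstdint>

// Local copies of two MFT_OUTPUT_STREAM_INFO flag values (assumed to match
// mftransform.h; verify against your SDK before relying on them).
constexpr std::uint32_t kProvidesSamples = 0x100;    // MFT_OUTPUT_STREAM_PROVIDES_SAMPLES
constexpr std::uint32_t kCanProvideSamples = 0x200;  // MFT_OUTPUT_STREAM_CAN_PROVIDE_SAMPLES

// Hypothetical helper type: who allocates the output sample.
enum class OutputAllocation {
    CallerMustAllocate,  // host puts its own sample into MFT_OUTPUT_DATA_BUFFER::pSample
    TransformAllocates,  // host leaves pSample null and receives the transform's sample
    EitherSide           // host may supply a sample or leave pSample null
};

// Maps the dwFlags value reported for an output stream to an allocation policy.
constexpr OutputAllocation ClassifyOutputAllocation(std::uint32_t flags) {
    if (flags & kProvidesSamples)
        return OutputAllocation::TransformAllocates;
    if (flags & kCanProvideSamples)
        return OutputAllocation::EitherSide;
    return OutputAllocation::CallerMustAllocate;
}
```

With this in place the host picks the right branch once, after the media types are set, instead of discovering the difference through a failed ProcessOutput call.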

I am finally getting to the reason which inspired me to write this post in the first place: doing input and output with Video Processor MFT. This is such a fundamental task that it has to have a few words on MSDN about it:

When you have configured the input type and output type for an MFT, you can begin processing data samples. You pass samples to the MFT for processing by using the IMFTransform::ProcessInput method, and then retrieve the processed sample by calling IMFTransform::ProcessOutput. You should set accurate time stamps and durations for all input samples passed. Time stamps are not strictly required but help maintain audio/video synchronization. If you do not have the time stamps for your samples it is better to leave them out than to use uncertain values.

If you use the MFT for conversion and you set the time stamps accurately, it is easy to achieve “one input, one output” behavior. The MFT is additionally synchronous, so there is no need to implement the asynchronous processing model, and it is possible to consume the API in a really straightforward way: set media types, input 1, output 1, input 2, output 2 and so on, everything within a single thread, linearly. Note that the MFT does not necessarily produce one output sample for every input sample; it is just possible to manage it this way.

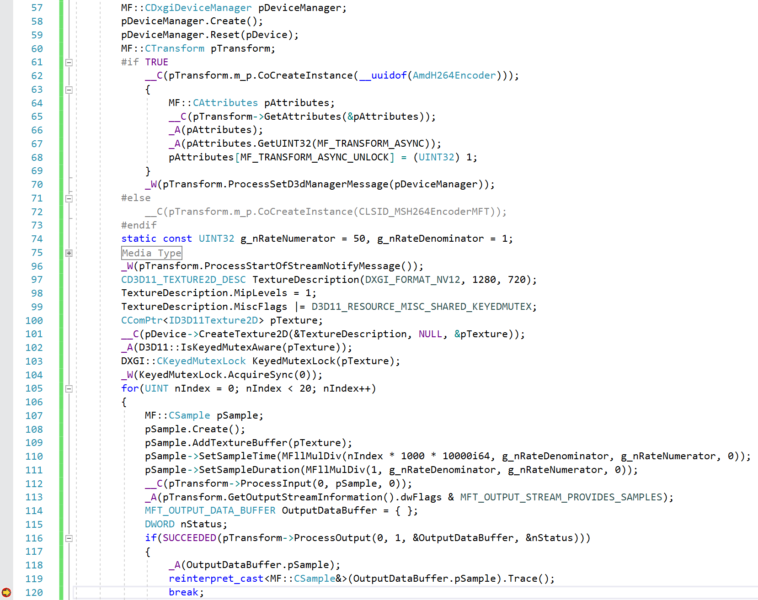

Now the linear code snippet looks this way:

However, even in such a simple scenario the MFT finds a way to horse around. Even when it has finished producing output for a given input, it still requires an additional IMFTransform::ProcessOutput call just to unlock itself for further input and return MF_E_TRANSFORM_NEED_MORE_INPUT. Skipping this unnecessary call, and so never receiving its failure status, leaves you unable to feed new input: IMFTransform::ProcessInput fails with MF_E_NOTACCEPTING. Even though this sort of matches the documented behavior (for example, in ASF related documentation: Processing Data in the Encoder), where the MFT host is expected to request output until it is no longer available, there is nothing in the documented contract that prevents the MFT from being friendlier on its end. Given the state and the role of this API, it should have been made super friendly to developers, and Microsoft failed to reach even a minimal acceptable level of friendliness here.

    // Feed one input sample to the transform
    ATLENSURE_SUCCEEDED(pTransform->ProcessInput(0, pSample, 0));
    MFT_OUTPUT_DATA_BUFFER OutputDataBuffer = { };
    // …
    DWORD nStatus;
    // Retrieve the converted sample for this input
    ATLENSURE_SUCCEEDED(pTransform->ProcessOutput(0, 1, &OutputDataBuffer, &nStatus));
    // …
    // NOTE: Kick the transform to unlock its input
    const HRESULT nProcessOutputResult = pTransform->ProcessOutput(0, 1, &OutputDataBuffer, &nStatus);
    ATLASSERT(nProcessOutputResult == MF_E_TRANSFORM_NEED_MORE_INPUT);
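
To make the contract explicit, here is a minimal portable sketch of the behavior described above, with the general “drain until the transform asks for more input” loop on top of it. Nothing in it is the real API: ToyTransform, Status and ConvertOne are hypothetical names, the status values merely stand in for S_OK, MF_E_NOTACCEPTING and MF_E_TRANSFORM_NEED_MORE_INPUT, and the frames are plain integers passed through unchanged. It models the lock as I observed it and does not claim to reflect how the MFT is implemented internally.

```cpp
#include <cassert>
#include <deque>
#include <optional>
#include <vector>

// Hypothetical status codes standing in for S_OK, MF_E_NOTACCEPTING and
// MF_E_TRANSFORM_NEED_MORE_INPUT; these are not the real HRESULT values.
enum class Status { Ok, NotAccepting, NeedMoreInput };

// Toy stand-in for the transform (not the real IMFTransform): once it has
// accepted a frame, it refuses further input until an output call has
// reported NeedMoreInput.
class ToyTransform {
public:
    Status ProcessInput(int frame) {
        if (!m_acceptingInput)
            return Status::NotAccepting;
        m_pending.push_back(frame);
        m_acceptingInput = false;
        return Status::Ok;
    }

    Status ProcessOutput(int& frame) {
        if (m_pending.empty()) {
            m_acceptingInput = true;  // the "kick" that unlocks input
            return Status::NeedMoreInput;
        }
        frame = m_pending.front();
        m_pending.pop_front();
        return Status::Ok;
    }

private:
    std::deque<int> m_pending;
    bool m_acceptingInput = true;
};

// Feeds one frame, then requests output until the transform reports that it
// needs more input. Returns the frames produced for this input, or
// std::nullopt if the input was rejected.
std::optional<std::vector<int>> ConvertOne(ToyTransform& transform, int frame) {
    if (transform.ProcessInput(frame) != Status::Ok)
        return std::nullopt;
    std::vector<int> produced;
    int output = 0;
    while (transform.ProcessOutput(output) == Status::Ok)
        produced.push_back(output);
    return produced;
}
```

Written as a loop, the extra call stops being a special case: the host always asks for output until it is told there is none, which also covers transforms that produce zero or several outputs per input.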